Seedance 2.0

Four input modalities. Native audio-visual sync. Cinematic camera reproduction. Multi-shot storytelling with extreme character consistency — all at up to 2K resolution.

2,182,705+ happy users

See Seedance 2.0 in Action

Real outputs generated by Seedance 2.0 — from cinematic one-take tracking shots to audio-synced narratives and multi-character scenes.

One-Take Tracking Shot

Continuous camera following subjects through multiple urban locations

Cinematic with Native Audio

Sunset cinematography with vintage car, foley and ambient sound

Action Scene Compositing

Fight scene under starry sky with dust effects and dynamic camera

Emotional Narrative

Story generated from image and audio references with character expression

Character Replacement

Band performance with seamless character swap while preserving motion

Multi-Shot Extension

Scene extended across multiple shots with consistent characters

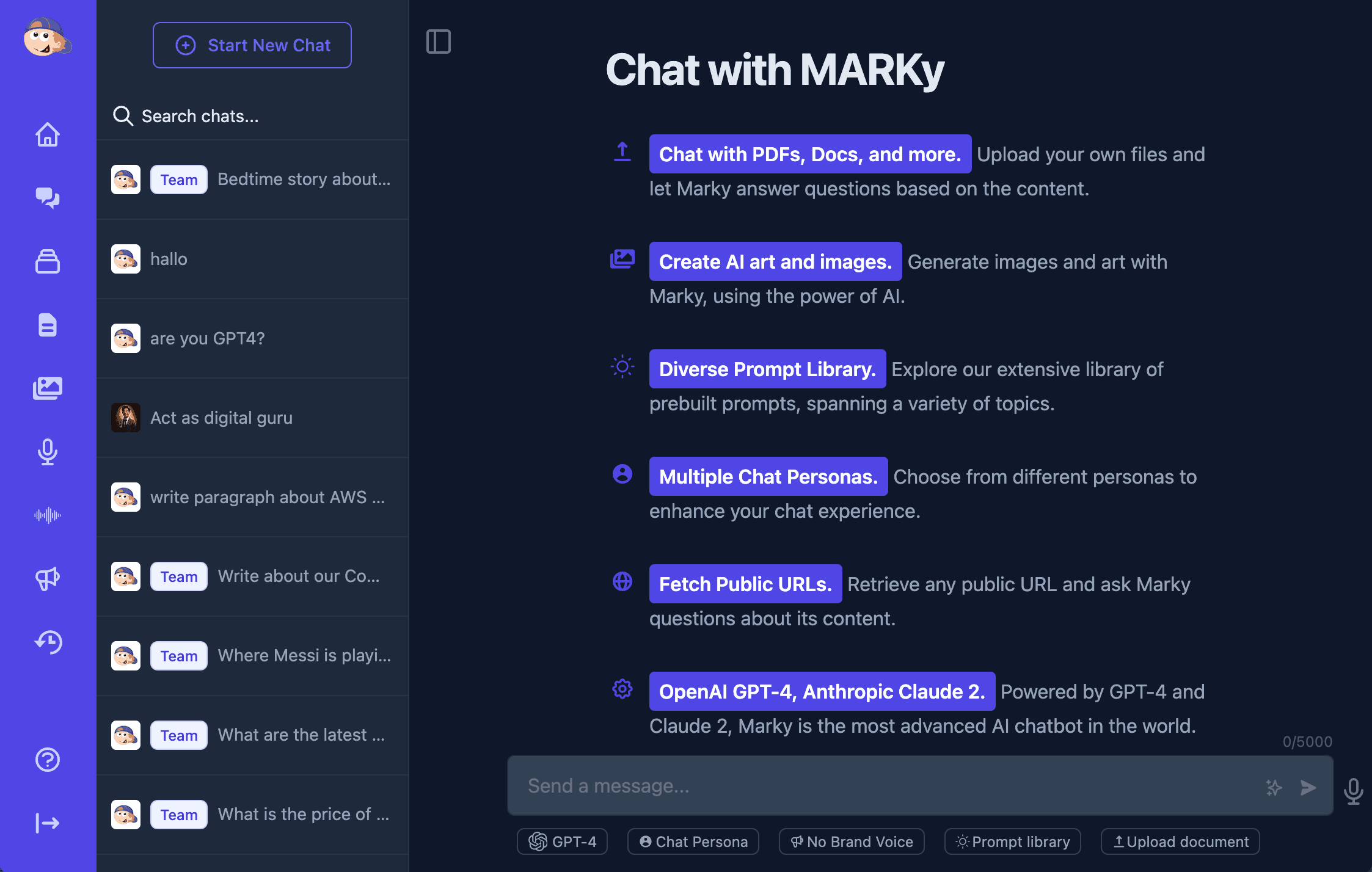

Four Ways to Create

Seedance 2.0 accepts text, images, video references, and audio as inputs — individually or combined for maximum creative control.

Text to Video

Describe any scene in natural language and Seedance 2.0 brings it to life with synchronized dialogue, foley, and ambient audio — all generated natively.

- Detailed scene & action control via prompts

- Native audio generation (dialogue + SFX + ambience)

- All 6 aspect ratios supported

- 4–12 second output duration

Image to Video

Upload a still image and watch it animate with realistic motion while preserving every detail — facial features, clothing, background, and lighting.

- Identity-preserving animation

- Use up to 9 reference images for characters & scenes

- Automatic camera movement generation

- Consistent style across frames

Video Reference

Upload reference videos to extract camera movements, character motion, and timing. Seedance 2.0 intelligently reproduces cinematic techniques without complex prompting.

- Camera trajectory extraction & reproduction

- Facial expression transfer from reference footage

- Motion duplication with new characters/scenes

- Up to 3 reference videos (15s total)

Audio-Driven Video

Use voiceovers, soundtracks, or narration as the primary control signal. The model generates visuals that match the rhythm, emotion, and timing of your audio.

- Beat-matched visual generation

- Lip-sync from uploaded voiceover

- Music-driven scene transitions

- Up to 3 audio files (15s total)

Up to 12 Files. One Generation.

Previously, getting complex camera movements or character-consistent multi-scene videos required writing elaborate prompts. With Seedance 2.0, you simply upload reference files and the model intelligently extracts what it needs.

Hollywood Camera Techniques

Describe camera movements in your prompt or upload a reference video — Seedance 2.0 extracts and reproduces professional cinematography techniques automatically.

Hitchcock Zoom

The classic dolly-zoom effect that creates a disorienting perspective shift — perfect for dramatic reveals and protagonist panic moments.
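The geometry behind a dolly zoom is simple enough to sketch. The snippet below is a minimal illustration, not a description of how Seedance 2.0 produces the effect: it uses the standard relation between framed width, camera distance, and field of view to show how the lens must narrow as the camera pulls back so the subject stays the same size. The function name `dolly_zoom_fov` and the sample distances are assumptions made for this example.

```python
import math


def dolly_zoom_fov(subject_width: float, distance: float) -> float:
    """Horizontal field of view (degrees) that keeps a subject of the given
    width exactly filling the frame at the given camera distance.

    Derived from: framed_width = 2 * distance * tan(fov / 2).
    """
    if subject_width <= 0 or distance <= 0:
        raise ValueError("subject_width and distance must be positive")
    return math.degrees(2 * math.atan(subject_width / (2 * distance)))


# The camera dollies back from 2 m to 8 m while the framed width stays 2 m.
# The field of view shrinks, which is what compresses the background.
for distance_m in (2.0, 4.0, 8.0):
    fov = dolly_zoom_fov(2.0, distance_m)
    print(f"distance {distance_m:.0f} m -> FOV {fov:.1f} deg")
```

When writing a prompt, the practical takeaway is to state both the camera move and the zoom direction, for example "camera dollies back while zooming in".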

Tracking Shots

Follow subjects through environments with smooth rear, side, and frontal tracking. Low-angle and high-angle variants supported.

Circling / Orbit

Multi-angle orbiting around subjects with natural acceleration and deceleration. Create dynamic 180° and 360° reveals.
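To make "natural acceleration and deceleration" concrete, here is a small sketch of an eased orbit path. It assumes a smoothstep easing curve and a flat 2D orbit purely for illustration; the model's actual motion curves are not documented here, and `smoothstep` and `orbit_position` are hypothetical helper names.

```python
import math


def smoothstep(t: float) -> float:
    """Ease-in/ease-out curve: starts slowly, speeds up, then slows down."""
    t = min(max(t, 0.0), 1.0)
    return t * t * (3 - 2 * t)


def orbit_position(t: float, radius: float, sweep_degrees: float,
                   center: tuple[float, float] = (0.0, 0.0)) -> tuple[float, float]:
    """Camera position at normalized time t (0..1) on a circular orbit.

    The angle follows the eased curve, so the camera accelerates out of the
    start position and decelerates into the end position.
    """
    angle = math.radians(sweep_degrees) * smoothstep(t)
    return (center[0] + radius * math.cos(angle),
            center[1] + radius * math.sin(angle))


# Sample a 180 degree reveal at five points in time.
for step in range(5):
    t = step / 4
    x, y = orbit_position(t, radius=3.0, sweep_degrees=180)
    print(f"t={t:.2f} -> x={x:+.2f}, y={y:+.2f}")
```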

Crane & Boom

Vertical camera movements that sweep up from ground level or descend from aerial perspectives with fluid motion.

Pan & Tilt

Precise degree-based panning (90°, 180°) with halt-and-resume control. Follow subject gaze direction naturally.

Push / Pull Zoom

Smooth zoom into close-ups or pull back to reveal wider scenes. Control pacing of the zoom for dramatic or subtle effect.

Native Audio-Visual Synchronization

Unlike other models that add audio as a post-processing step, Seedance 2.0 generates high-fidelity audio as part of the core generation pipeline. Three intelligent audio layers are synchronized with visual content at the frame level.

- Dialogue — Phoneme-level lip-sync in 8+ languages

- Foley — Action-matched sound effects (footsteps, impacts, doors)

- Ambience — Environmental audio (wind, crowds, rain, traffic)

- Beat matching — Audio-visual rhythm synchronization for music

Lip-synced speech · 8+ languages · Phoneme-level accuracy

Footsteps · Impacts · Object interactions · Environmental SFX

Background atmosphere · Weather · Crowds · Spatial audio

How It Works

Choose Your Inputs

Start with a text prompt, upload reference images for characters and scenes, add reference videos for camera movements, or provide audio for lip-sync and beat matching.

Configure Output

Select your aspect ratio (16:9, 9:16, 1:1, etc.), video duration (4–12 seconds), and desired visual style — from photorealistic to anime to film noir.

Reference with @Tags

In your prompt, use @Image1, @Image2, @Video1 notation to tell the model exactly how each reference file should influence the output.
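If you assemble prompts programmatically, it can help to check that every tag points at a file you actually uploaded. The sketch below is a local pre-flight check and not part of any Seedance API: it assumes tags are numbered from 1 in upload order and only covers the `@Image` and `@Video` forms shown on this page. The helper names are hypothetical.

```python
import re

# Matches the @Image1 / @Video1 style tags shown above.
TAG_PATTERN = re.compile(r"@(Image|Video)(\d+)")


def find_tags(prompt: str) -> list[tuple[str, int]]:
    """Return (kind, index) pairs for every reference tag in the prompt."""
    return [(kind, int(number)) for kind, number in TAG_PATTERN.findall(prompt)]


def missing_references(prompt: str, uploaded: dict[str, int]) -> list[str]:
    """List tags that do not correspond to an uploaded file.

    `uploaded` maps a kind ("Image" or "Video") to how many files of that
    kind were uploaded. Assumption: tags are 1-based in upload order.
    """
    missing = []
    for kind, index in find_tags(prompt):
        if index < 1 or index > uploaded.get(kind, 0):
            missing.append(f"@{kind}{index}")
    return missing


prompt = ("@Image1 is the main character. @Image2 is the office location. "
          "@Video1 provides the camera movement.")
print(find_tags(prompt))
print(missing_references(prompt, {"Image": 1, "Video": 1}))
```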

Generate & Iterate

Seedance 2.0 processes your multimodal inputs and generates video with synchronized audio. Refine your prompt or swap references to iterate.

Tips for Better Results

Get the most out of Seedance 2.0 with these prompting techniques from power users and the official documentation.

Use Camera Language

Include specific cinematography terms: "Hitchcock zoom", "tracking shot following the subject", "slow 180° pan", "low-angle crane rising". Seedance 2.0 understands professional film vocabulary.

“A man in a dark suit walks through a neon-lit corridor. Camera starts with a rear tracking shot, then transitions to a circling orbit as he reaches the elevator.”

Reference Your Uploads

Use @Image and @Video notation in prompts to bind specific files to roles. Assign images to characters, locations, or style references, and videos to camera or motion references.

“@Image1 is the main character. @Image2 is the office location. @Video1 provides the camera movement. The character sits at a desk, picks up a phone, and looks out the window.”

Describe Emotion & Motion

Go beyond visual descriptions. Include emotional states, breathing patterns, micro-expressions, and body language for more realistic character animation.

“The woman looks up from her book, eyes widening with surprise. She stands slowly, her hands trembling slightly, and takes a hesitant step forward.”

Layer Audio Direction

Describe the soundscape you want: dialogue content, ambient sounds, and action-specific foley. The model generates all three audio layers natively.

“A busy cafe scene. Background chatter and clinking cups. A barista calls out an order. Rain patters against the window. The protagonist sighs and stirs their coffee.”

Any Aesthetic You Can Imagine

Specify the visual style in your prompt or let the model infer it from reference images.

Technical Details

2.0 vs 1.5 Pro

Every dimension improved — resolution, audio, inputs, speed, and creative control.

| Feature | Seedance 2.0 | Seedance 1.5 Pro |
|---|---|---|
| Resolution | Up to 2K | 1080p |
| Native Audio | Dialogue + Foley + Ambience | Basic audio sync |
| Lip-Sync | 8+ languages, phoneme-level | Limited language support |
| Input Modalities | Text + Image + Video + Audio | Text + Image only |
| Max Input Files | 12 files simultaneously | 1–2 files |
| Character Consistency | Extreme (multi-shot IP continuity) | Good (single shot) |
| Multi-Shot Storytelling | Yes (auto scene transitions) | Not supported |
| Camera Control | Reference video extraction | Prompt-only |
| Video Editing | Natural language editing | Not supported |
| Generation Speed | 30% faster | Baseline |

What You Can Create

Social Media Content

Create scroll-stopping videos for TikTok, Instagram Reels, and YouTube Shorts. Native audio generation means your content is ready to post — no audio editing needed.

Product Marketing

Generate cinematic product reveals with professional camera movements. Upload a product photo, add a reference video for the camera technique, and get a polished commercial.

Short Films & Narratives

Produce multi-shot story sequences with consistent characters across scenes. Automatic transitions and character identity persistence enable episodic content creation.

Educational Content

Create engaging explainers with synchronized voiceover lip-sync in 8+ languages. Upload narration audio and let the model generate matching visuals.

Music Videos

Generate beat-matched visuals from audio input. The model synchronizes scene transitions, character movement, and camera cuts to the rhythm of your music.
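As a rough picture of what "beat-matched" means, the sketch below places planned cut times onto the nearest beat of a track with a known tempo. This is only an illustration of the idea under a constant-tempo assumption; it does not describe how the model analyzes audio, and `beat_times` and `snap_to_beat` are hypothetical names.

```python
def beat_times(bpm: float, duration_s: float) -> list[float]:
    """Timestamps (seconds) of every beat in a constant-tempo track."""
    if bpm <= 0 or duration_s < 0:
        raise ValueError("bpm must be positive and duration non-negative")
    interval = 60.0 / bpm
    return [i * interval for i in range(int(duration_s / interval) + 1)]


def snap_to_beat(time_s: float, beats: list[float]) -> float:
    """Move a planned cut to the closest beat."""
    return min(beats, key=lambda beat: abs(beat - time_s))


beats = beat_times(bpm=120, duration_s=4)   # one beat every 0.5 s
planned_cuts = [1.3, 2.2, 3.6]
print([snap_to_beat(cut, beats) for cut in planned_cuts])
```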

Brand Storytelling

Build episodic content series with extreme character consistency. Your brand mascot, spokesperson, or product maintains identity across every shot.

Film Pre-visualization

Use reference videos to prototype complex camera movements before actual production. Test Hitchcock zooms, crane shots, and orbit sequences virtually.

Multilingual Content

Generate the same video with lip-sync in English, Mandarin, Korean, Japanese, Spanish, Indonesian, and more — from a single prompt with different audio.

Frequently Asked Questions

Everything you need to know about Seedance 2.0

What is Seedance 2.0?

Seedance 2.0 is ByteDance's next-generation AI video model. It generates video with native audio (dialogue, foley, and ambience) from four input types: text, images, video references, and audio. It outputs up to 2K resolution with extreme character consistency and professional camera techniques.

What makes Seedance 2.0 different from other AI video models?

Three key differentiators: (1) Native audio-visual generation — audio isn't post-processed but generated jointly with video, enabling true lip-sync and beat matching. (2) Reference video input — upload existing videos to extract and reproduce camera movements and character motion without complex prompting. (3) Multi-shot storytelling with extreme character consistency across scenes.

How does the multimodal input system work?

You can combine up to 12 files in a single generation, within these per-type limits: up to 9 images (for characters, locations, and style references), up to 3 video clips (for camera movements and motion references, 15s total), and up to 3 audio files (for voiceover and music, 15s total). Use @Image1, @Video1 notation in your prompt to assign a role to each file.
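The limits above can be checked before you upload. The following is a minimal local sketch, assuming the 12-file cap applies to all file types combined and the 15-second caps apply to the summed length of each type; `ReferenceFile` and `validate_references` are hypothetical names, not part of a published API.

```python
from dataclasses import dataclass

MAX_TOTAL_FILES = 12
MAX_FILES_PER_KIND = {"image": 9, "video": 3, "audio": 3}
MAX_TOTAL_SECONDS = {"video": 15.0, "audio": 15.0}  # summed per kind


@dataclass
class ReferenceFile:
    kind: str             # "image", "video", or "audio"
    seconds: float = 0.0  # clip length; ignored for images


def validate_references(files: list[ReferenceFile]) -> list[str]:
    """Return a list of human-readable problems; empty means the set fits."""
    problems = []
    if len(files) > MAX_TOTAL_FILES:
        problems.append(
            f"{len(files)} files uploaded; the combined limit is {MAX_TOTAL_FILES}")
    for kind, max_count in MAX_FILES_PER_KIND.items():
        group = [f for f in files if f.kind == kind]
        if len(group) > max_count:
            problems.append(f"{len(group)} {kind} files; the limit is {max_count}")
        max_seconds = MAX_TOTAL_SECONDS.get(kind)
        total_seconds = sum(f.seconds for f in group)
        if max_seconds is not None and total_seconds > max_seconds:
            problems.append(
                f"{kind} references total {total_seconds:g}s; the limit is {max_seconds:g}s")
    for f in files:
        if f.kind not in MAX_FILES_PER_KIND:
            problems.append(f"unsupported file kind: {f.kind!r}")
    return problems


files = [ReferenceFile("image")] * 4 + [ReferenceFile("video", 10.0),
                                        ReferenceFile("video", 8.0)]
print(validate_references(files))
```

Note that the per-type maximums add up to 15, so you cannot max out all three types at once under a 12-file cap.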

What camera techniques does Seedance 2.0 support?

Seedance 2.0 understands professional cinematography language including: Hitchcock zoom (dolly-zoom), tracking shots (rear, side, frontal), orbiting/circling shots, crane and boom movements, pan and tilt with precise degree control, push/pull zoom, and robotic arm multi-angle effects. You can describe these in text or upload a reference video.

What resolutions and formats are supported?

Output: up to 2K resolution (1080p standard), 4–12 second duration, 6 aspect ratios (16:9, 9:16, 4:3, 3:4, 21:9, 1:1). The model supports photorealistic, anime, 2D/3D animation, watercolor, film noir, and abstract visual styles.
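If you script your generation settings, a small check against the values listed above can catch mistakes early. This is a sketch under the assumption that the six ratios and the 4–12 second range are hard limits; the function names are hypothetical, and exact pixel dimensions for "2K" are not specified on this page, so none are assumed here.

```python
SUPPORTED_ASPECT_RATIOS = ("16:9", "9:16", "4:3", "3:4", "21:9", "1:1")
MIN_DURATION_S = 4
MAX_DURATION_S = 12


def check_output_settings(aspect_ratio: str, duration_s: float) -> None:
    """Raise ValueError if the settings fall outside the documented options."""
    if aspect_ratio not in SUPPORTED_ASPECT_RATIOS:
        raise ValueError(
            f"unsupported aspect ratio {aspect_ratio!r}; "
            f"choose one of {', '.join(SUPPORTED_ASPECT_RATIOS)}")
    if not MIN_DURATION_S <= duration_s <= MAX_DURATION_S:
        raise ValueError(
            f"duration must be {MIN_DURATION_S}-{MAX_DURATION_S} seconds, "
            f"got {duration_s}")


def aspect_ratio_value(aspect_ratio: str) -> float:
    """Width divided by height, e.g. '1:1' -> 1.0."""
    width, height = aspect_ratio.split(":")
    return int(width) / int(height)


check_output_settings("9:16", 8)            # passes silently
print(round(aspect_ratio_value("21:9"), 3))
```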

How does the native audio generation work?

Seedance 2.0 generates three audio layers simultaneously with the video: Dialogue (with phoneme-level lip-sync in 8+ languages), Foley (action-matched sound effects like footsteps, impacts, and environmental interactions), and Ambience (background audio like wind, crowds, rain). All layers are synchronized with the visual content.

Can I control character appearance across multiple shots?

Yes. Seedance 2.0 features extreme character consistency — facial features, clothing details, accessories, and visual style are maintained uniformly across multi-shot narratives. Upload character reference images and the model maintains identity persistence throughout the generated sequence.

How does video reference input work?

Upload up to 3 reference videos (15s total). The model extracts camera trajectories, character motion patterns, and facial expressions from the reference footage, then applies them to your generated video with new characters and scenes. This replaces complex text-based camera direction.

What languages are supported for lip-sync?

Seedance 2.0 supports phoneme-level lip-sync in 8+ languages: English, Mandarin Chinese, Cantonese, Korean, Japanese, Spanish, Indonesian, and more. You can generate the same scene with lip-sync in different languages by changing the audio input.

How many credits does Seedance 2.0 cost?

Seedance 2.0 uses per-second pricing. Credit costs scale with duration — shorter clips cost less, longer clips cost more. Visit the video generation page for current credit rates.
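Per-second pricing means the cost is simply the rate multiplied by the clip length. The sketch below shows that arithmetic only; the rate used is a made-up placeholder, not a real price, and `estimate_credits` is a hypothetical helper. Check the video generation page for actual rates.

```python
def estimate_credits(duration_s: int, credits_per_second: float) -> float:
    """Estimated credit cost for a clip under linear per-second pricing."""
    if not 4 <= duration_s <= 12:
        raise ValueError("duration must be between 4 and 12 seconds")
    if credits_per_second < 0:
        raise ValueError("credits_per_second cannot be negative")
    return duration_s * credits_per_second


PLACEHOLDER_RATE = 10.0  # hypothetical credits per second, for illustration only

for seconds in (4, 8, 12):
    print(f"{seconds}s clip -> {estimate_credits(seconds, PLACEHOLDER_RATE):g} credits")
```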

Can I use Seedance 2.0 videos commercially?

Yes. Videos generated with Seedance 2.0 on Easy-Peasy.AI can be used for commercial purposes including marketing, advertising, social media, product videos, and content creation, subject to our terms of service.

How fast is Seedance 2.0 compared to previous versions?

Seedance 2.0 is 30% faster than Seedance 1.5 Pro while delivering higher resolution (up to 2K vs 1080p), more input modalities, and native multi-layer audio generation. Typical generation completes in under 60 seconds for standard clips.

Create Faster With AI.

Try it Risk-Free.

Stop wasting time and start creating high-quality content immediately with the power of generative AI.